Starting this semester, St. Joe’s faculty are expected to include a statement on the use of artificial intelligence (AI) in their course syllabi, according to a Dec. 4 email to faculty from the Office of Teaching and Learning (OTL).

As the use of generative AI technologies has grown, universities across the country have implemented new policies, prompting debates over how and when generative AI programs, like ChatGPT and Perplexity AI, should be permitted in classrooms, if at all.

A 2024 Harvard study, Teen and Young Adult Perspectives on Generative AI, found that just over half of young people ages 14 to 22 have used generative AI at some point in their lives, with 4% indicating they use AI daily. The report also examined the most common uses of AI, finding that 53% of AI users use it to gather information, 51% use it to brainstorm and 46% use it for schoolwork help.

Young people are also excited by the ways AI could help them access information for both work and school. With regard to schoolwork, teens want adults to know they are using AI “as a regular part of their schoolwork and learning, including — but not only — for cheating,” according to the study.

In response to this greater awareness and use of AI, OTL has implemented a multi-year strategy to increase student and faculty knowledge of AI and to make expectations regarding AI use clearer, said Aubrey Wang, Ph.D., professor of educational leadership, counseling and social work and interim director of OTL.

Wang said it is important for both students and faculty to practice critical thinking, communication, teamwork skills and AI literacy as AI continues to evolve.

“We’re trying to develop students’ awareness of the ethics around AI and the fact that a lot of AI-generated content might have hallucination built in, they might have bias, and one just cannot use the AI-generated content as it is,” Wang said. “It really requires humans to take another look at it, to evaluate it, to compare it and learn from it.”

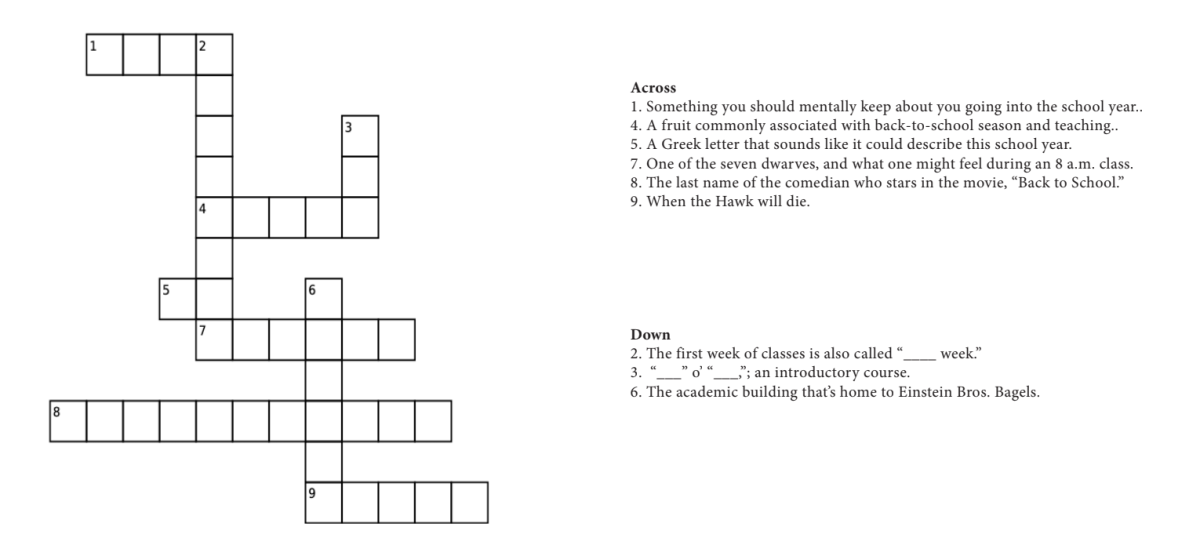

Although all faculty are expected to include a statement regarding AI use in their syllabi, Wang said faculty are free to use their discretion in creating AI policies for their specific courses. OTL recommends three potential approaches to AI syllabus statements: restrictive, prescriptive or permissive.

The restrictive approach prohibits use of AI entirely. The prescriptive approach allows use of AI in some forms but not in others (for example, allowing a student to use AI to check grammar and spelling, but not to write full responses for them). The permissive approach allows full use of AI and may involve the required use of AI to complete specific tasks or learn certain skills. A professor may apply any of these approaches depending on their course’s objectives and learning goals, Wang said.

Some professors, such as Richard Haslam, Ph.D., associate professor of English, have applied a more restrictive approach to AI use. Haslam prohibits use of “machine learning applications of any kind” for his courses, and all assignments are completed in class, on paper.

Haslam first implemented this policy in his syllabi in the spring 2023 semester. He said machine learning applications can interfere with students’ reading, writing, listening and speaking skills, as well as conflict with the university’s mission statement.

“In my own particular field, I don’t see any good coming from it,” Haslam said. “One other thing that’s mentioned in the mission statement is a rigorous education. I think that it interferes with a rigorous education, and it interferes with thinking critically, those two parts of the mission statement.”

While Haslam is opposed to AI use in his field of literary studies, he recognizes that other disciplines may differ in their approaches.

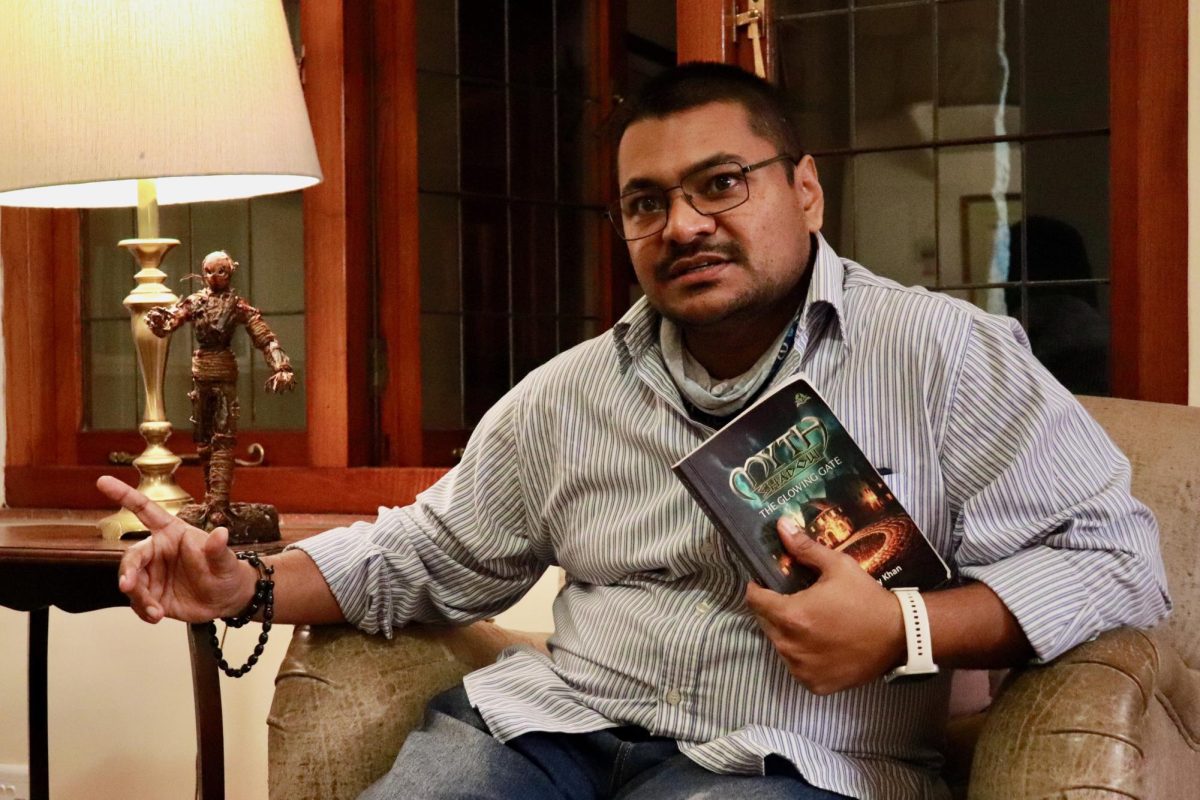

Ruben Mendoza, Ph.D., associate professor of decision and system sciences, has employed a more permissive approach when writing his AI policy. Mendoza said he encourages students to use AI as a tool for research.

“The syllabus says, ‘Use it. Use it all the time. Look it up,’” Mendoza said. “In the end, for me, for my course, for my assignments, it won’t matter. It can only help you.”

Mendoza said AI can be either a valuable tool or a potential drawback, depending on how it is used.

“I don’t see the tool in and of itself as being a problem,” Mendoza said. “It can be a problem, but the problem is created by how the student uses it.”

Many students are working out appropriate ways to use AI in their courses. Sean Parchesky ’27, a finance major, said he doesn’t use AI to do his work for him but does use it to check his work, particularly for math problems. He said what truly matters is how AI is used, since its impact depends on the approach.

“I think using the right context, it could definitely be a good tool to help with learning,” Parchesky said. “But I also feel like a lot of people are abusing it already, so it makes a tricky situation.”

Wang said implementing clearer AI policies in syllabi will help faculty prepare students for their chosen fields as more industries begin to use AI technologies.

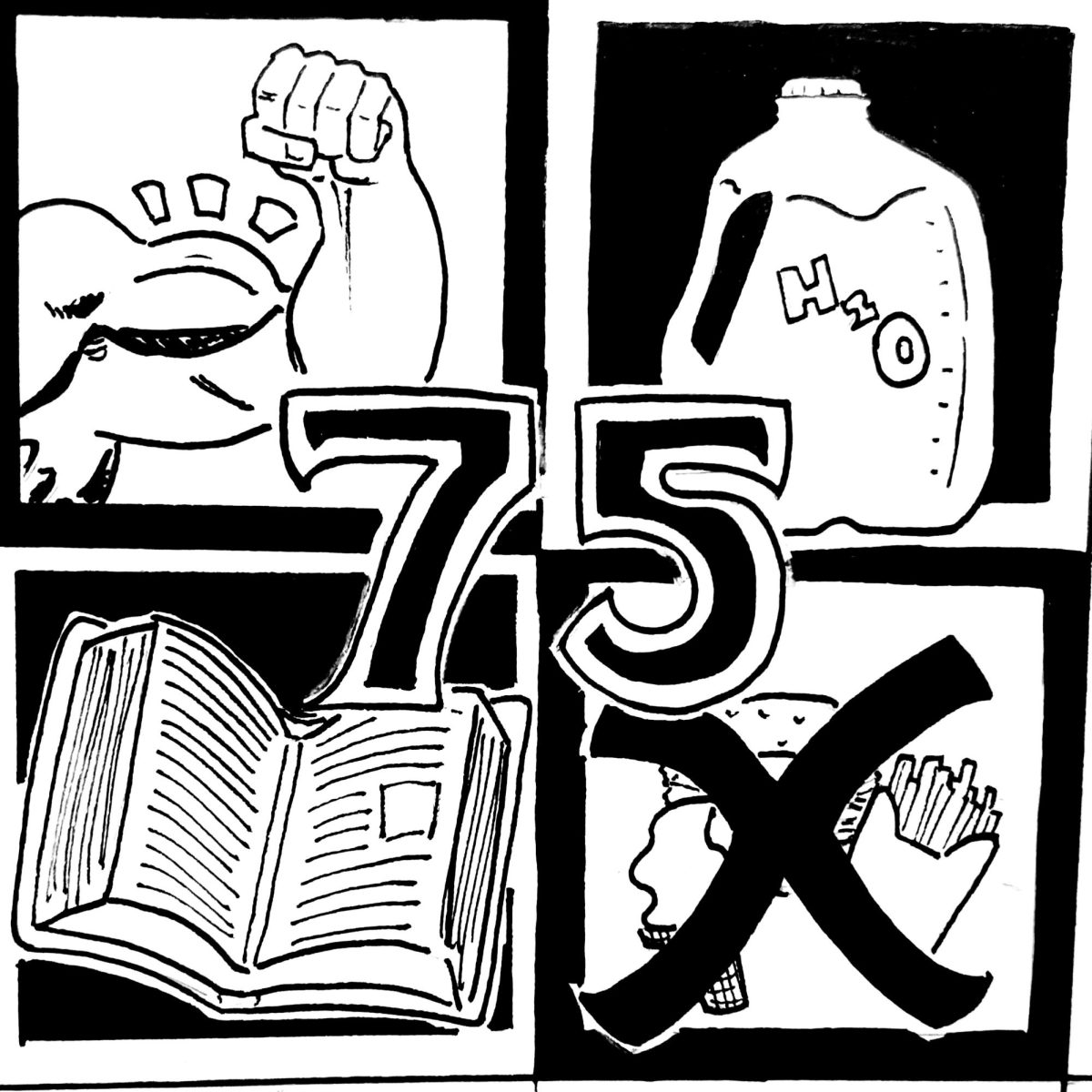

Seventy-five faculty members completed an asynchronous course on teaching with AI during the summer of 2024, Wang said, and in the fall of 2024, OTL developed a guide for the use of AI in higher education that is available to the St. Joe’s community.

“AI is constantly evolving, and I think the dialogue around it is certainly evolving as well,” Wang said. “Through the multi-year strategic plan that we have, our goal is making sure that our faculty are learning their foundational concepts around AI and using AI in teaching and then, based on their discipline and their learning objectives, choose the best policies that suit them.”